Exploring 3D Interaction with Gaze Guidance in Augmented Reality

IEEE VR 2023

Yiwei Bao, Jiaxi Wang, Zhimin Wang, and Feng Lu

Abstract

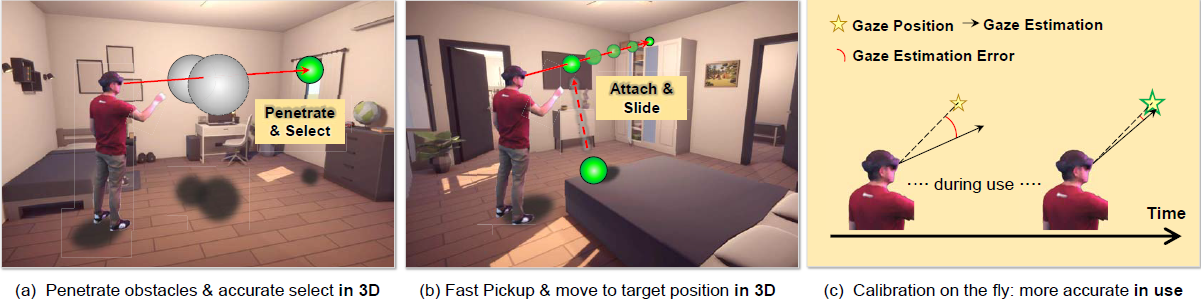

Recent research on hand-eye coordination has shown that gaze can improve object selection and translation in AR under certain scenarios. However, several limitations remain. In this work, we investigate whether gaze can help select objects under heavy 3D occlusion and help translate 3D objects in the depth dimension. We also investigate whether the gaze calibration burden before use can be reduced. To this end, we develop new methods with appropriate gaze guidance for 3D interaction in AR, together with an implicit online calibration method. We conduct two user studies to evaluate different interaction methods. The results show that our methods not only improve the effectiveness of occluded object selection but also significantly alleviate arm fatigue in the depth translation task. We also evaluate the proposed implicit online calibration method and find that its accuracy is comparable to that of the standard 9-point explicit calibration, a step toward practical use in the real world.
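The abstract uses the standard 9-point explicit calibration as the baseline but does not describe how it works. As a rough illustration only, the sketch below shows one common form of explicit calibration: the user fixates 9 known targets on a 3x3 grid, and an affine correction from raw gaze estimates to target positions is fitted by least squares. The grid spacing, the affine model, the simulated distortion, and all function names (`solve3`, `fit_affine`, `apply_affine`, `mean_error`) are assumptions made for this example. This is not the authors' implementation, and the paper's implicit online method is not shown.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical 3x3 grid of calibration targets, in degrees of visual angle.
TARGETS: List[Point] = [(x, y) for y in (-10.0, 0.0, 10.0) for x in (-10.0, 0.0, 10.0)]


def solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve the 3x3 system m @ x = v by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]  # augmented matrix
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(a[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (a[r][n] - s) / a[r][r]
    return x


def fit_affine(raw: Sequence[Point], targets: Sequence[Point]) -> List[List[float]]:
    """Least-squares fit of target_axis = a*rx + b*ry + c, separately for x and y."""
    rows = [(rx, ry, 1.0) for rx, ry in raw]
    # Normal matrix A^T A is shared by both output axes.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in range(2):
        atb = [sum(r[i] * t[axis] for r, t in zip(rows, targets)) for i in range(3)]
        coeffs.append(solve3(ata, atb))
    return coeffs  # [[ax, bx, cx], [ay, by, cy]]


def apply_affine(coeffs: List[List[float]], p: Point) -> Point:
    """Map one raw gaze estimate to a corrected gaze point."""
    rx, ry = p
    cx, cy = (c[0] * rx + c[1] * ry + c[2] for c in coeffs)
    return (cx, cy)


def mean_error(coeffs: List[List[float]], raw: Sequence[Point],
               targets: Sequence[Point]) -> float:
    """Mean Euclidean error (degrees) between corrected gaze and targets."""
    total = 0.0
    for p, t in zip(raw, targets):
        cx, cy = apply_affine(coeffs, p)
        total += math.hypot(cx - t[0], cy - t[1])
    return total / len(raw)
```

For example, with a simulated (hypothetical) affine distortion of the raw gaze estimates, the fitted correction removes the error almost entirely:

```python
raw = [(0.9 * x + 0.1 * y + 1.5, 1.1 * y - 2.0) for x, y in TARGETS]
coeffs = fit_affine(raw, TARGETS)
print(mean_error(coeffs, raw, TARGETS))  # close to zero for an exactly affine distortion
```

An affine model is chosen here only because it is simple and its inverse is also affine. Real eye-tracker calibrations often use higher-order polynomial or model-based mappings, and measured gaze contains noise that no fitted correction can remove.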

Related Work

Related work from our group:

Gaze-Vergence-Controlled See-Through Vision in Augmented Reality

Edge-Guided Near-Eye Image Analysis for Head Mounted Displays