GazeRing: Enhancing Hand-Eye Coordination with Pressure Ring in Augmented Reality

IEEE ISMAR conference paper, 2024.

Zhimin Wang, Jingyi Sun, Mingwei Hu, Maohang Rao, Weitao Song, Feng Lu

Abstract

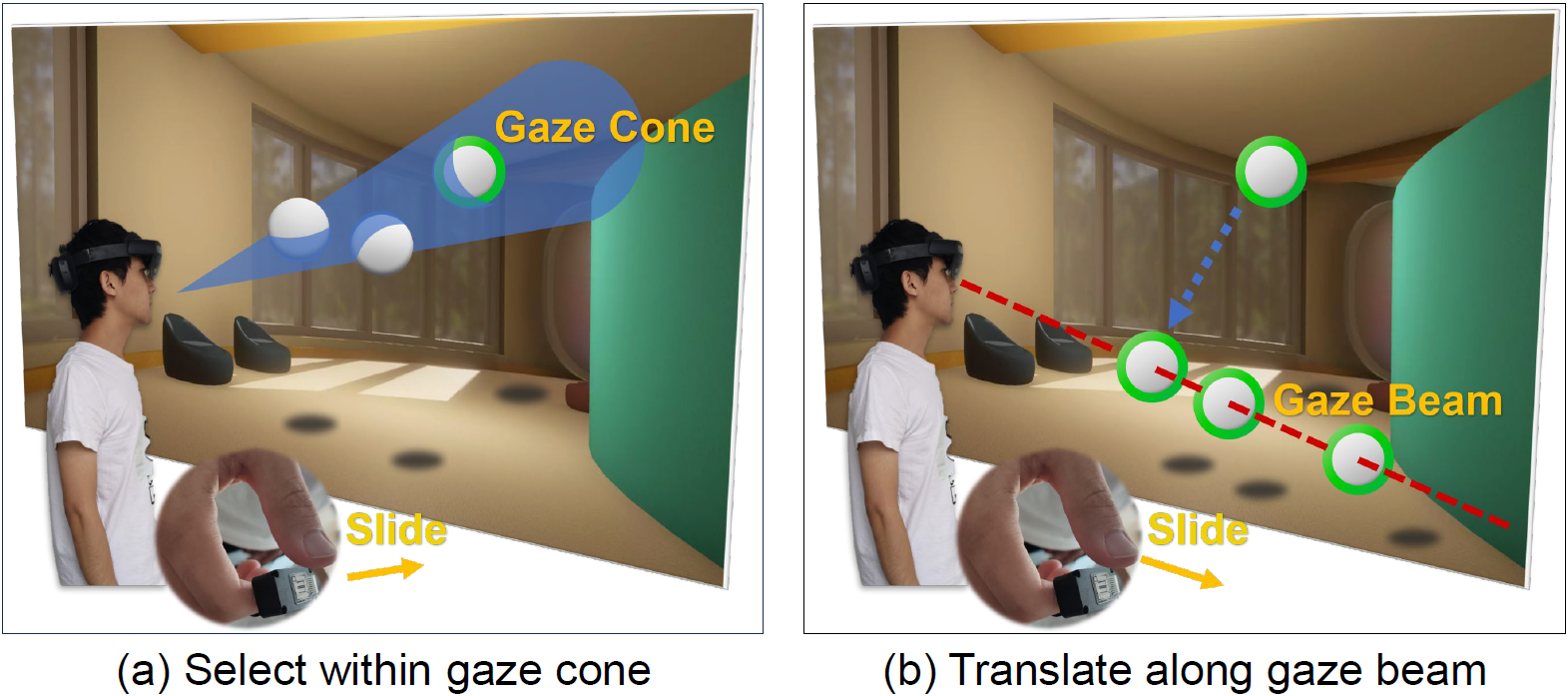

Hand-eye coordination techniques find widespread utility in augmented reality and virtual reality headsets, as they retain the speed and intuitiveness of eye gaze while leveraging the precision of hand gestures. However, in contrast to obvious interactive gestures, users prefer less noticeable interactions in public settings due to concerns about social acceptance. To address this, we propose GazeRing, a multimodal interaction technique that combines eye gaze with a smart ring, enabling private and subtle hand-eye coordination while allowing users’ hands complete freedom of movement. Specifically, we design a pressure-sensitive ring that supports sliding interactions in eight directions to facilitate efficient 3D object manipulation. Additionally, we introduce two control modes for the ring: finger-tap and finger-slide, to accommodate diverse usage scenarios. Through user studies involving object selection and translation tasks under two eye-tracking accuracy conditions, with two degrees of occlusion, GazeRing demonstrates significant advantages over existing techniques that do not require obvious hand gestures (e.g., gaze-only and gaze-speech interactions). Our GazeRing technique achieves private and subtle interactions, potentially improving the user experience in public settings.

Presentation Video:

Supplemental Video:

Related Work

Our related work:

Gaze-Vergence-Controlled See-Through Vision in Augmented Reality

Interaction With Gaze, Gesture, and Speech in a Flexibly Configurable Augmented Reality System

Edge-Guided Near-Eye Image Analysis for Head Mounted Displays